Read the full article on DataCamp: LLaMA 4 With vLLM – A Guide With Demo Project

Learn how to deploy and use Meta’s LLaMA 4 Scout with vLLM on RunPod for both text completion and multimodal inference.

Overview

Meta’s latest models, LLaMA 4 Scout and Maverick, offer long-context, multimodal understanding, and efficient inference. Paired with vLLM, a high-throughput inference engine, you can deploy these models using OpenAI-compatible APIs on GPUs like H100.

In this tutorial, you’ll learn to:

- 🚀 Deploy LLaMA 4 on RunPod

- 💬 Run a local chat interface with multi-turn support

- 🖼 Perform multimodal inference (text + image) using vLLM

Why Use LLaMA 4 on vLLM?

vLLM is a high-performance LLM engine featuring:

- ✅ Efficient PagedAttention memory

- 🖼 Multimodal + long-context (up to 10M tokens)

- 🔄 OpenAI-compatible API

- 🧠 Supports multi-GPU scaling (tensor + memory parallelism)

Hosting LLaMA 4 Scout on RunPod

Step 1: Set Up RunPod

- Log in at RunPod.io

- Add $25+ to your balance

Step 2: Deploy a Multi-GPU Pod

- Select 4x H100 NVL GPUs (>=24 GB VRAM)

- Choose PyTorch 2.4.0

- Set Container + Volume Disk to 1000 GB

- (Optional) Add Hugging Face token for auto-download

Step 3: Connect to the Pod

Use either:

- JupyterLab Terminal

- SSH or HTTP

Step 4: Install vLLM and Libraries

pip install -U vllm

pip install transformers accelerate pillow

Step 5: Launch LLaMA 4 on vLLM

VLLM_DISABLE_COMPILE_CACHE=1 vllm serve meta-llama/Llama-4-Scout-17B-16E-Instruct \

--tensor-parallel-size 4 \

--max-model-len 100000 \

--override-generation-config='{"attn_temperature_tuning": true}'

✅ Your API is now running on port 8000!

Text Completion With LLaMA 4 Scout

Step 1: Install and Import SDK

pip install openai colorama

from openai import OpenAI

from colorama import Fore, Style, init

Step 2: Initialize OpenAI-Compatible Client

init(autoreset=True)

client = OpenAI(

api_key="EMPTY",

base_url="http://localhost:8000/v1"

)

Step 3: Start Chat Interface

messages = [{"role": "system", "content": "You are a helpful assistant."}]

while True:

user_input = input("User: ")

if user_input.lower() in ["exit", "quit"]: break

messages.append({"role": "user", "content": user_input})

chat_response = client.chat.completions.create(

model="meta-llama/Llama-4-Scout-17B-16E-Instruct",

messages=messages

)

assistant = chat_response.choices[0].message.content

print("Assistant:", assistant)

messages.append({"role": "assistant", "content": assistant})

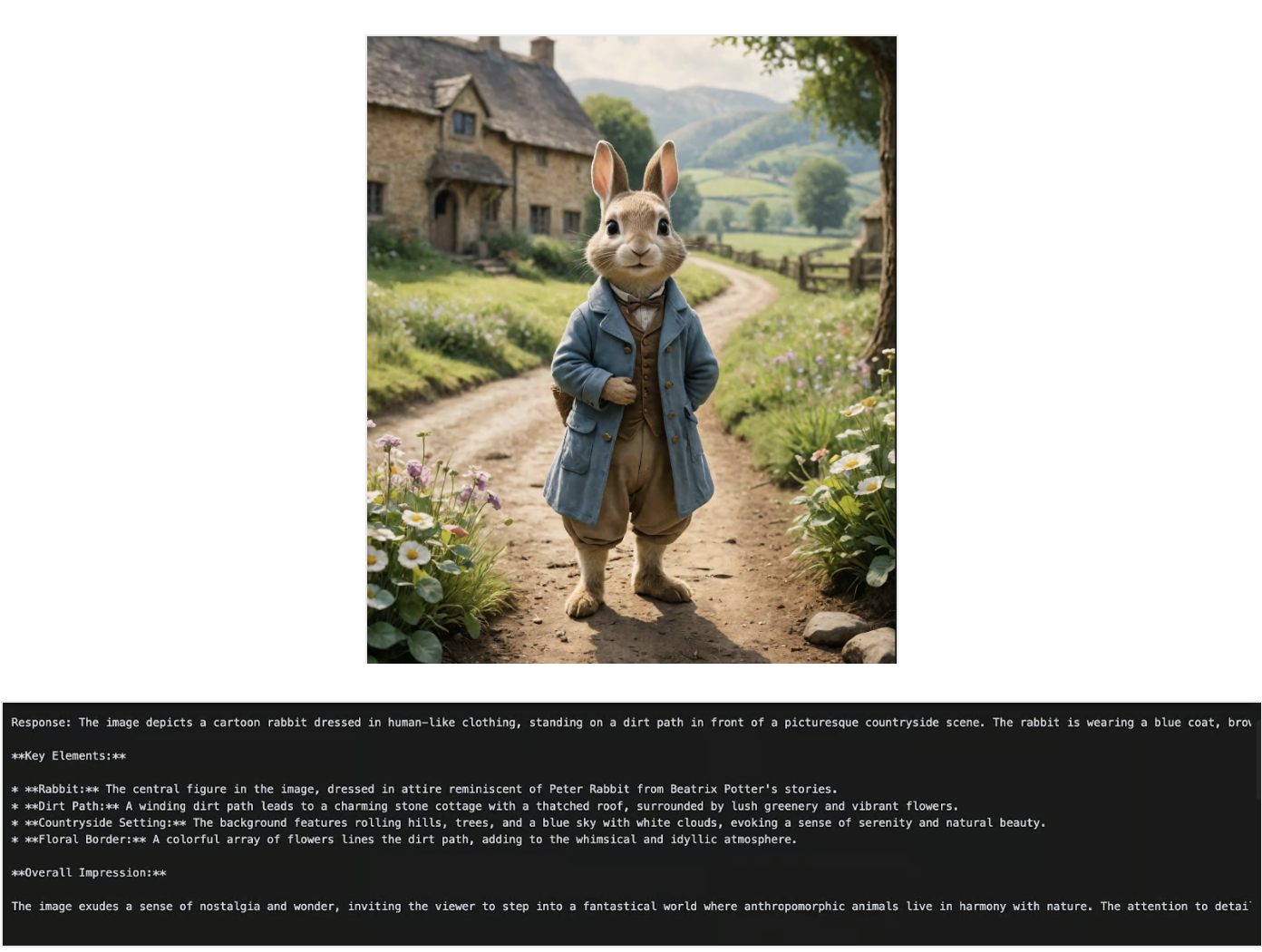

Multimodal Inference With LLaMA 4 Scout

Step 1: Install and Import

pip install openai

from openai import OpenAI

Step 2: Connect Client

client = OpenAI(

api_key="EMPTY",

base_url="http://localhost:8000/v1"

)

Step 3: Submit Multimodal Prompt

messages = [

{

"role": "user",

"content": [

{"type": "image_url", "image_url": {"url": image_url1}},

{"type": "text", "text": "Can you describe what's in this image?"}

]

}

]

chat_response = client.chat.completions.create(

model="meta-llama/Llama-4-Scout-17B-16E-Instruct",

messages=messages,

)

print("Response:", chat_response.choices[0].message.content)

📸 This will process image + text input and return a grounded visual answer!

Conclusion

In this guide, we:

- 🚀 Deployed LLaMA 4 Scout on RunPod with vLLM

- 💬 Built a terminal-based chatbot

- 🖼 Ran a multimodal Q&A demo

- 🧪 Explored OpenAI-compatible endpoints

LLaMA 4 with vLLM is ideal for:

- Long-context reasoning

- Multimodal assistants

- Cost-effective GPU deployment

Comments