Read the full article on DataCamp

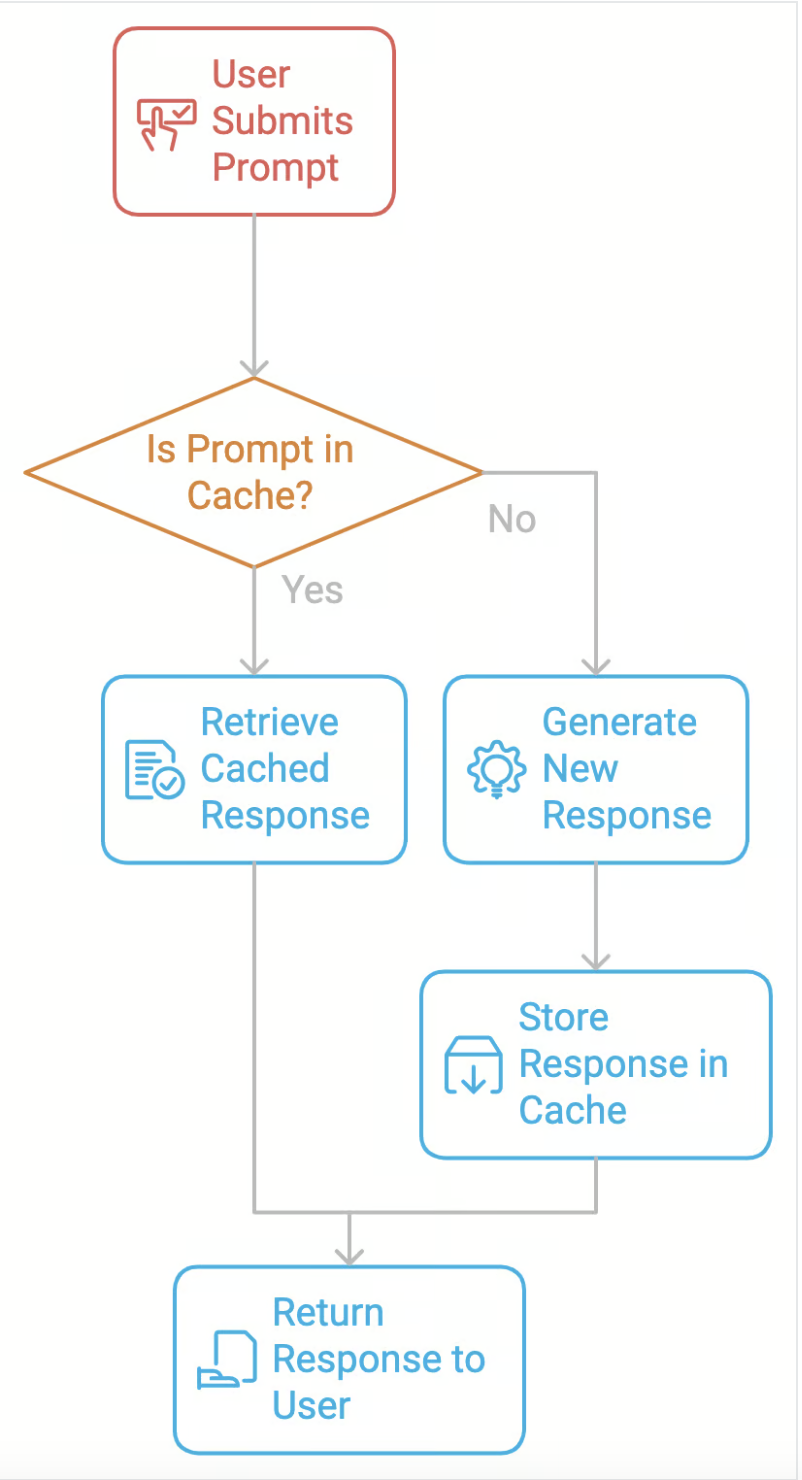

Learn what prompt caching is and how to use it with Ollama to optimize LLM interactions, reduce costs, and improve performance in AI apps.

Overview

This tutorial covers prompt caching, its benefits, and practical code examples for implementation using Ollama.
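As a rough illustration of the idea, the sketch below shows one simple form of prompt caching: keeping responses in memory so that a repeated prompt is answered without a second model call. It is not taken from the article. The `PromptCache` class, the `ollama_generate` helper, and the `llama3` model name are hypothetical choices for illustration, and the code assumes an Ollama server listening on its default local port.

```python
import hashlib
import json
import urllib.request
from typing import Callable, Dict

# Assumption: a local Ollama server on its default port.
OLLAMA_URL = "http://localhost:11434/api/generate"


def ollama_generate(prompt: str, model: str) -> str:
    """Send one non-streaming generate request to a local Ollama server."""
    payload = json.dumps(
        {"model": model, "prompt": prompt, "stream": False}
    ).encode("utf-8")
    request = urllib.request.Request(
        OLLAMA_URL, data=payload, headers={"Content-Type": "application/json"}
    )
    with urllib.request.urlopen(request) as response:
        return json.loads(response.read())["response"]


class PromptCache:
    """Hypothetical in-memory cache keyed by (model, prompt)."""

    def __init__(self, generate: Callable[[str, str], str] = ollama_generate) -> None:
        self._generate = generate
        self._store: Dict[str, str] = {}
        self.hits = 0
        self.misses = 0

    @staticmethod
    def _key(prompt: str, model: str) -> str:
        # Hash model and prompt together so different models never share entries.
        return hashlib.sha256(f"{model}\n{prompt}".encode("utf-8")).hexdigest()

    def ask(self, prompt: str, model: str = "llama3") -> str:
        key = self._key(prompt, model)
        if key in self._store:
            self.hits += 1
            return self._store[key]
        # Cache miss: call the backend once and remember the answer.
        self.misses += 1
        answer = self._generate(prompt, model)
        self._store[key] = answer
        return answer
```

With a running Ollama server, `PromptCache().ask("What is prompt caching?")` would call the model on the first request and serve an identical second request from memory. Passing a different `generate` callable makes the cache usable without a server, for example in tests.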

Article Details

Published: November 19, 2024

Read Time: 12 minutes

