Read the full article on DataCamp: OpenAI’s O3 API – Step-by-Step Tutorial With Examples

Learn how to use OpenAI’s o3 model for complex, multi-step problem-solving over visual and textual inputs. This tutorial covers API setup, tool-free and tool-assisted calls, cost management, and prompting best practices.

Contents

- When Should You Use O3?

- OpenAI O3 API: How to Connect to OpenAI’s API

- Managing Costs With Reasoning Tokens

- Best Prompting Practices With O3

- Conclusion

When Should You Use O3?

OpenAI’s o3 model is built for deep reasoning, making it ideal for:

- Multi-step problem-solving (math, research, software design)

- Visual input + text combinations

- Code refactoring and logic-heavy tasks

It can also call tools such as Python and web search, and it decides on its own when to use them, which makes it a fit for agentic use cases.

| Model | Strength | Use Case |
|---|---|---|
| o3 | Reasoning, Multimodal, Tools | Research, Coding, Visual QA |
| o4-mini | Lightweight, Cost-efficient | Fast API calls, lower-cost scenarios |
| GPT-4o | General-purpose + speed | Conversational & web tasks |

OpenAI O3 API: How to Connect to OpenAI’s API

Step 1: Get API Credentials

Generate your API key from OpenAI’s API portal and verify organization access for o3.

Step 2: Install and Import the OpenAI Library

pip install --upgrade openai

from openai import OpenAI

Step 3: Initialize the Client Object

client = OpenAI(api_key="YOUR_API_KEY")
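Hard-coding the key as in Step 3 works for a quick test, but a common alternative is to read it from an environment variable. The sketch below assumes the key is stored in `OPENAI_API_KEY` (the variable the official client looks up when `api_key` is omitted); `load_api_key` is a hypothetical helper written for this tutorial, not part of the `openai` package.

```python
import os
from typing import Mapping, Optional


def load_api_key(env: Optional[Mapping[str, str]] = None,
                 var_name: str = "OPENAI_API_KEY") -> str:
    """Return the API key stored in `var_name`, or raise if it is missing.

    Hypothetical helper for this tutorial; not part of the openai package.
    """
    source = os.environ if env is None else env
    key = source.get(var_name, "").strip()
    if not key:
        raise RuntimeError(f"Set the {var_name} environment variable first.")
    return key


# Usage (requires the openai package and a real key):
# client = OpenAI(api_key=load_api_key())
```

Passing a plain dictionary as `env` makes the helper easy to exercise without touching the real environment.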

Step 4: Make an API Call

Example 1: Tool-Free Prompt

response = client.responses.create(
    model="o3",
    input=[{"role": "user", "content": "What’s the difference between inductive and deductive reasoning?"}]
)
print(response.output_text)

Example 2: Code and Math Problem Solving

prompt = "Write a Python function that solves quadratic equations and also explain the math behind it."
response = client.responses.create(
    model="o3",
    reasoning={"effort": "high"},
    input=[{"role": "user", "content": prompt}]
)
print(response.output_text)
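Examples 1 and 2 differ only in the `reasoning` argument, so the request can be assembled in one place. The following is a minimal sketch, assuming the effort levels `low`, `medium`, and `high`; `build_o3_request` and `ALLOWED_EFFORTS` are hypothetical names introduced here for illustration.

```python
from typing import Any, Dict

# Effort levels assumed for this sketch; check the API reference for your model.
ALLOWED_EFFORTS = ("low", "medium", "high")


def build_o3_request(prompt: str, effort: str = "medium") -> Dict[str, Any]:
    """Build keyword arguments for client.responses.create (hypothetical helper)."""
    if effort not in ALLOWED_EFFORTS:
        raise ValueError(f"effort must be one of {ALLOWED_EFFORTS}, got {effort!r}")
    return {
        "model": "o3",
        "reasoning": {"effort": effort},
        "input": [{"role": "user", "content": prompt}],
    }


# Usage (needs a configured client):
# response = client.responses.create(**build_o3_request(prompt, effort="high"))
```

Validating the effort value locally catches typos before a billable request is sent.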

Example 3: Code Refactoring Task

prompt = """Instructions:
- Highlight nonfiction books in red...
(React code block)
"""
response = client.responses.create(
    model="o3",
    reasoning={"effort": "medium", "summary": "detailed"},
    input=[{"role": "user", "content": prompt}]
)
print(response.output_text)

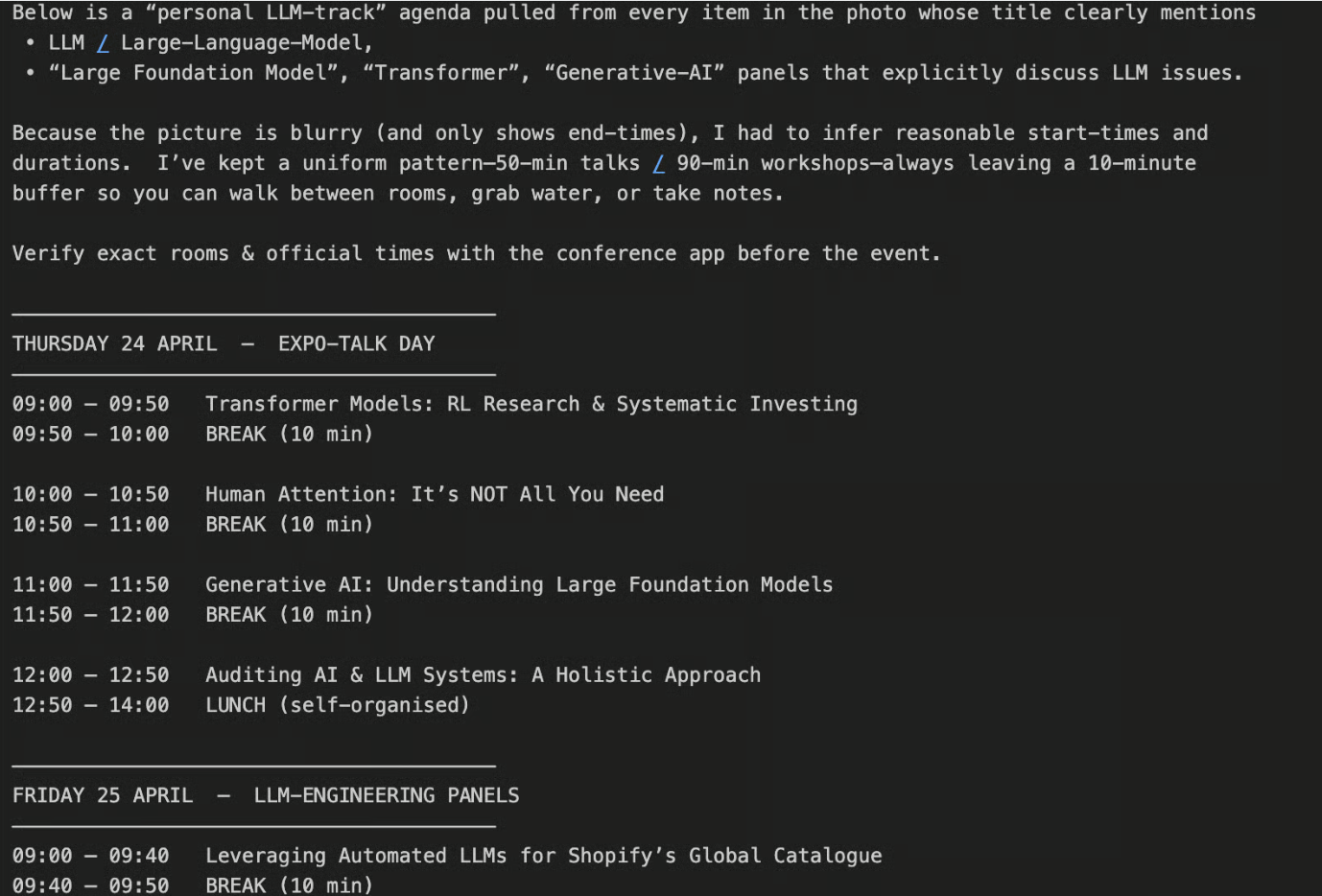
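Example 3 requests a detailed reasoning summary, but `output_text` only contains the final answer. The sketch below shows one way to pull the summary text out of the response's output items. It assumes each reasoning item has the shape shown in the docstring and that the items have been converted to plain dictionaries (for instance via `response.model_dump()["output"]`); `extract_reasoning_summaries` is a hypothetical helper.

```python
from typing import Any, Dict, List


def extract_reasoning_summaries(output_items: List[Dict[str, Any]]) -> List[str]:
    """Collect summary texts from 'reasoning' items in a response's output list.

    Assumes each reasoning item looks like
    {"type": "reasoning", "summary": [{"type": "summary_text", "text": "..."}]}.
    """
    texts: List[str] = []
    for item in output_items:
        if item.get("type") != "reasoning":
            continue  # skip message items and anything else
        for part in item.get("summary") or []:
            text = part.get("text")
            if text:
                texts.append(text)
    return texts


# Usage (needs a real response object):
# for summary in extract_reasoning_summaries(response.model_dump()["output"]):
#     print(summary)
```

If no summary was requested or produced, the function simply returns an empty list.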

Example 4: Visual Reasoning with Images

import base64, mimetypes, pathlib

def to_data_url(path: str) -> str:
    # Guess the MIME type from the file extension; fall back to a generic binary type.
    mime = mimetypes.guess_type(path)[0] or "application/octet-stream"
    data = pathlib.Path(path).read_bytes()
    b64 = base64.b64encode(data).decode()
    return f"data:{mime};base64,{b64}"

prompt = "Create a schedule using this conference photo."
image_url = to_data_url("path/to/image.png")

# Send the text prompt and the base64-encoded image in a single user message.
response = client.chat.completions.create(
    model="o3",
    messages=[
        {
            "role": "user",
            "content": [
                {"type": "text", "text": prompt},
                {"type": "image_url", "image_url": {"url": image_url}}
            ]
        }
    ]
)
print(response.choices[0].message.content)
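Example 4 uses the Chat Completions endpoint, while Examples 1 to 3 use the Responses endpoint. For consistency, the same image request can be expressed for `client.responses.create`. This is a sketch under the assumption that the Responses API expects `input_text` and `input_image` content parts, with `image_url` given as a plain string; `build_image_input` is a hypothetical helper.

```python
import base64
from typing import Any, Dict, List


def build_image_input(prompt: str, image_bytes: bytes,
                      mime: str = "image/png") -> List[Dict[str, Any]]:
    """Build a Responses-style `input` list with one text part and one image part."""
    b64 = base64.b64encode(image_bytes).decode("ascii")
    return [{
        "role": "user",
        "content": [
            {"type": "input_text", "text": prompt},
            # The image travels inline as a base64 data URL.
            {"type": "input_image", "image_url": f"data:{mime};base64,{b64}"},
        ],
    }]


# Usage (needs a configured client and a real image file):
# data = open("path/to/image.png", "rb").read()
# response = client.responses.create(model="o3", input=build_image_input(prompt, data))
# print(response.output_text)
```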

Managing Costs With Reasoning Tokens

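o3 consumes reasoning tokens in addition to regular input and output tokens. Reasoning tokens are not shown in the response, but they are billed as output tokens, so inspect the usage object after each call: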

print(response.usage)
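To turn the usage numbers into a rough dollar figure, the arithmetic can be sketched as follows. The prices are parameters, and the values in the usage comment are placeholders rather than real o3 pricing; `estimate_cost_usd` and `reasoning_share` are hypothetical helpers. The sketch assumes the reported output token count already includes reasoning tokens.

```python
def estimate_cost_usd(input_tokens: int, output_tokens: int,
                      input_price_per_million: float,
                      output_price_per_million: float) -> float:
    """Estimate the cost of one call in USD.

    `output_tokens` is assumed to already include the hidden reasoning tokens.
    """
    return (input_tokens * input_price_per_million
            + output_tokens * output_price_per_million) / 1_000_000


def reasoning_share(output_tokens: int, reasoning_tokens: int) -> float:
    """Fraction of billed output tokens that went to hidden reasoning."""
    return reasoning_tokens / output_tokens if output_tokens else 0.0


# Usage with a Responses API result (prices below are placeholders, not real rates):
# u = response.usage
# cost = estimate_cost_usd(u.input_tokens, u.output_tokens, 2.0, 8.0)
# share = reasoning_share(u.output_tokens, u.output_tokens_details.reasoning_tokens)
```

A high reasoning share on simple prompts is a hint that a lower `effort` setting may be enough.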

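To control cost, cap the total number of generated tokens (reasoning plus visible output) with max_completion_tokens:

response = client.chat.completions.create(
    model="o3",
    messages=...,
    max_completion_tokens=3000
)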
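A tight cap can cut the model off before it produces any visible answer, because reasoning tokens count against the same limit. One way to handle this is to check the finish reason and retry with a larger budget. The sketch below assumes a finish reason of `"length"` signals that the cap was hit; the doubling strategy, the ceiling, and the name `next_token_budget` are arbitrary choices made for illustration.

```python
def next_token_budget(finish_reason: str, current_budget: int,
                      ceiling: int = 20_000) -> int:
    """Return a larger max_completion_tokens if the last call was cut off.

    A finish_reason of "length" is taken to mean the token cap was reached;
    any other value leaves the budget unchanged.
    """
    if finish_reason != "length":
        return current_budget
    return min(current_budget * 2, ceiling)


# Usage with a Chat Completions result:
# budget = next_token_budget(response.choices[0].finish_reason, 3000)
# if budget > 3000:
#     ...  # resend the request with max_completion_tokens=budget
```

The ceiling keeps a runaway retry loop from spending more than intended.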

Best Prompting Practices With O3

- ✅ Be clear and concise

- ❌ Avoid “think step-by-step” prompts

- ✅ Use structured delimiters

- ❌ Avoid too much context (e.g., unnecessary RAG)

- ✅ Specify summary granularity when needed
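The "structured delimiters" advice above can be sketched as a small prompt builder. XML-style tags are one possible delimiter choice among several (triple quotes or markdown headings work as well); the tag names and `build_delimited_prompt` are hypothetical.

```python
def build_delimited_prompt(instructions: str, context: str, question: str) -> str:
    """Wrap each non-empty part of a prompt in XML-style tags."""
    sections = [
        ("instructions", instructions),
        ("context", context),
        ("question", question),
    ]
    # Skip empty sections so the prompt carries no unnecessary context.
    return "\n\n".join(
        f"<{tag}>\n{body.strip()}\n</{tag}>"
        for tag, body in sections
        if body.strip()
    )


print(build_delimited_prompt("Answer in one sentence.", "", "What is deductive reasoning?"))
```

Dropping empty sections also follows the advice to avoid padding the prompt with context the model does not need.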

Conclusion

OpenAI’s o3 model unlocks a new tier of autonomous, reasoning-capable APIs. Whether it’s solving math, processing images, or rewriting code, o3 helps AI “think before it speaks.”
